Power Platform Self-Service Analytics

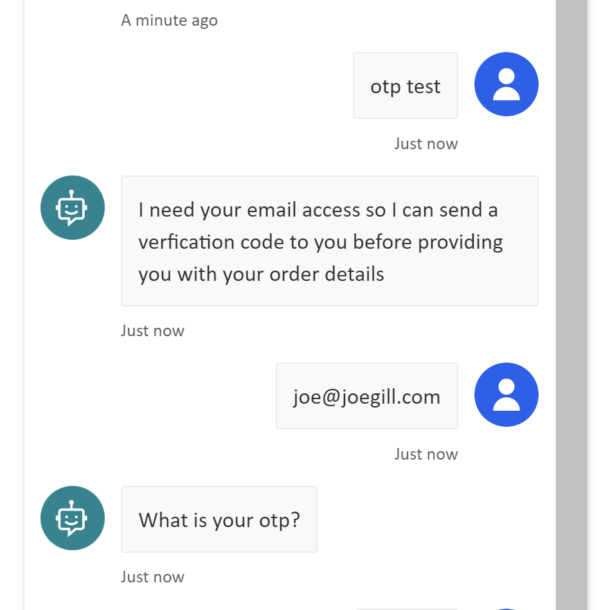

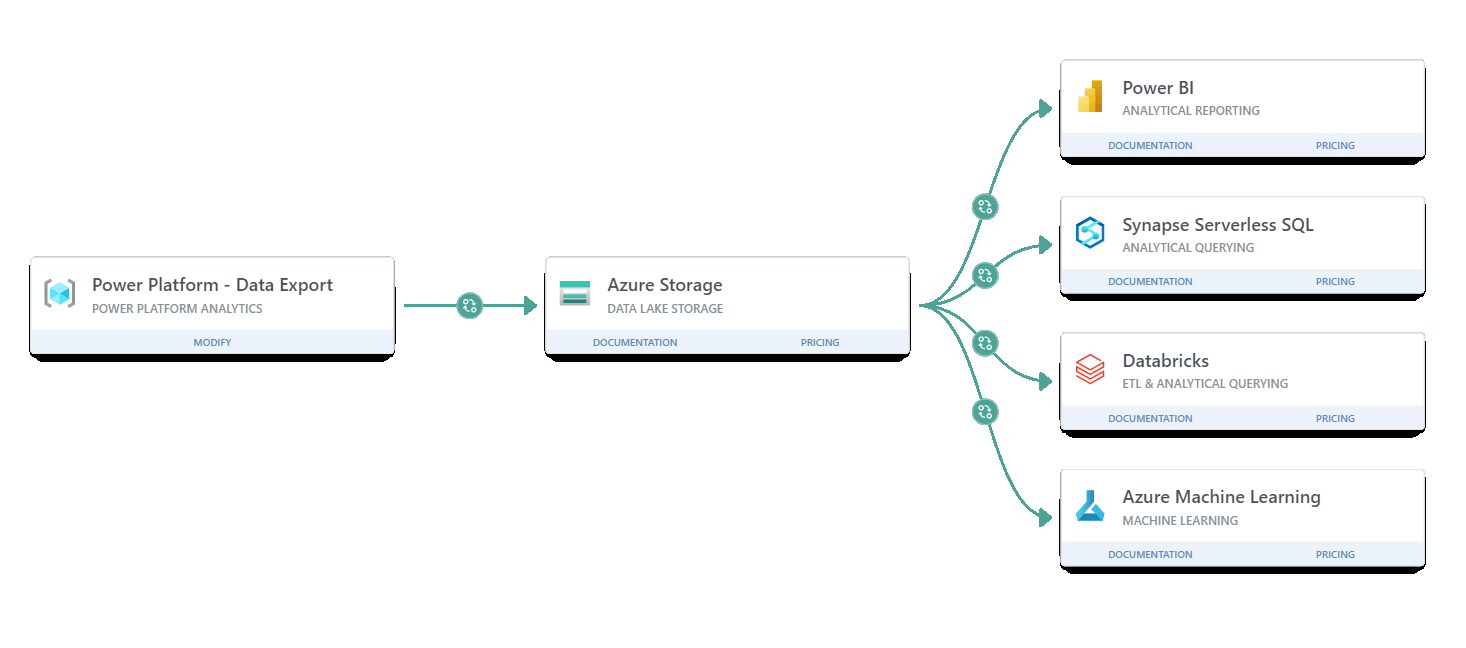

From the Power Platform admin center, you can use the Data Export option to export details of your Power Apps and Power Automate flows to an Azure Data Lake. Once Data Export is configured, the data is exported daily, along with usage data for your apps and flows. From the Data Lake, the data can be consumed by a variety of tools, such as Power BI, Azure Data Factory, and Synapse Analytics, for self-service analytics.

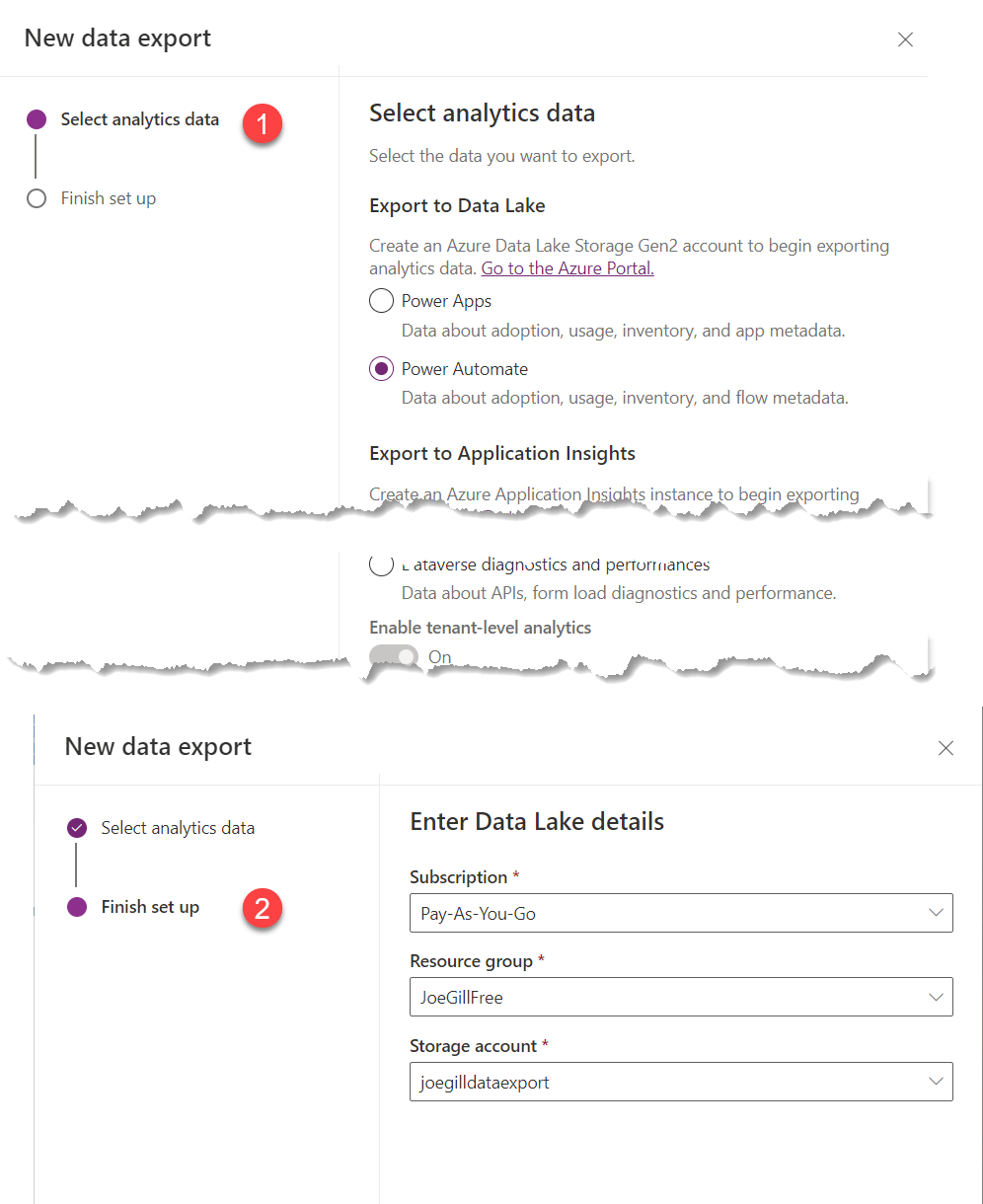

How to configure Data Export

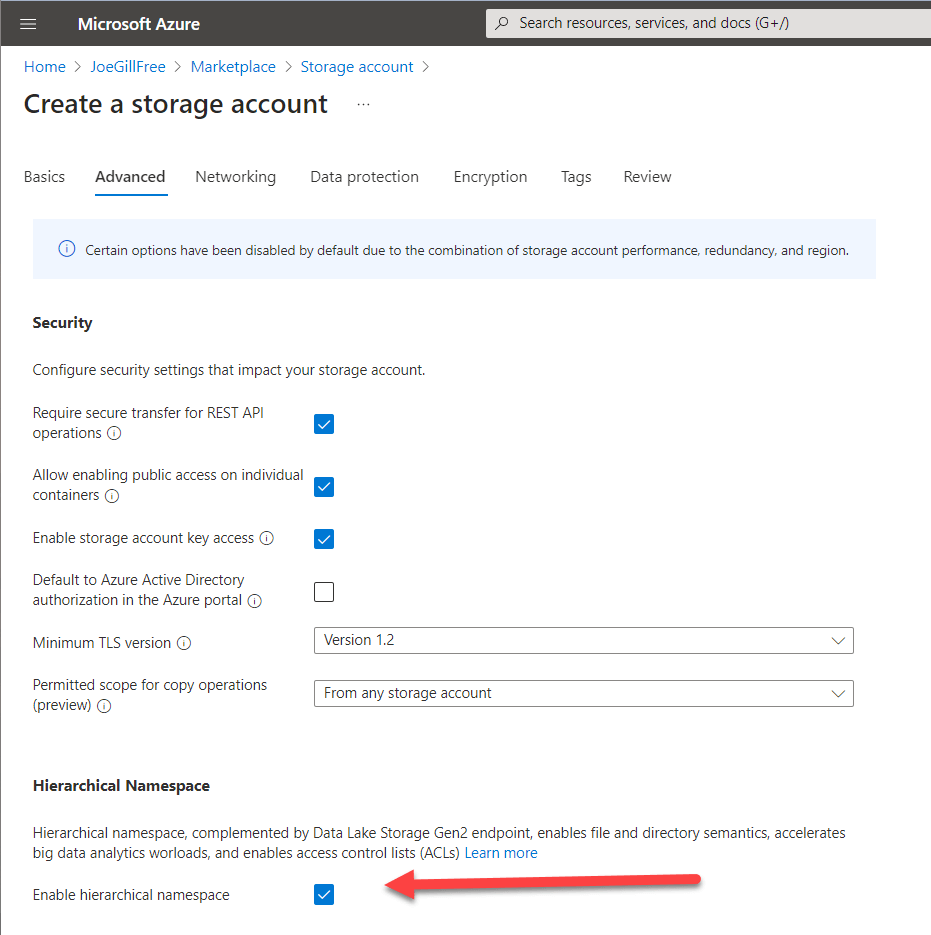

To set up Data Export, you will need an Azure subscription and an Azure Data Lake. Azure Data Lake Storage Gen2 is built on top of Azure Blob Storage, so all you need to do is create a storage account with hierarchical namespace enabled.

Once you have created your storage account, go to the Power Platform admin center. From the Data Export option, select whether you want your Power Automate or Power Apps information exported, and provide the details of the storage account to be used. There are no options to filter which environments are included in the export, so it covers the inventory and usage data for all Power Apps and flows in your tenant. I found it takes one to two days before the export starts pushing data to your Data Lake.

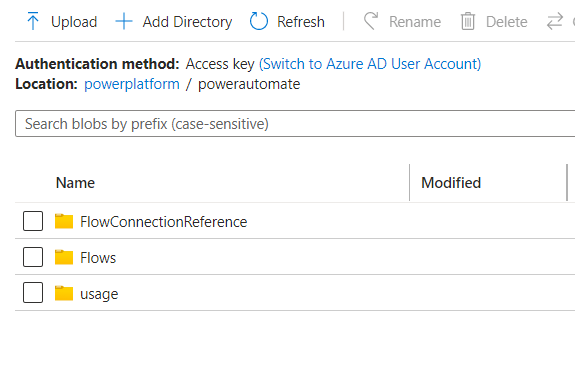

Once the Data Export process starts, it creates a container called powerplatform with folders for powerapps and powerautomate. Hopefully, the folder structure is self-explanatory.

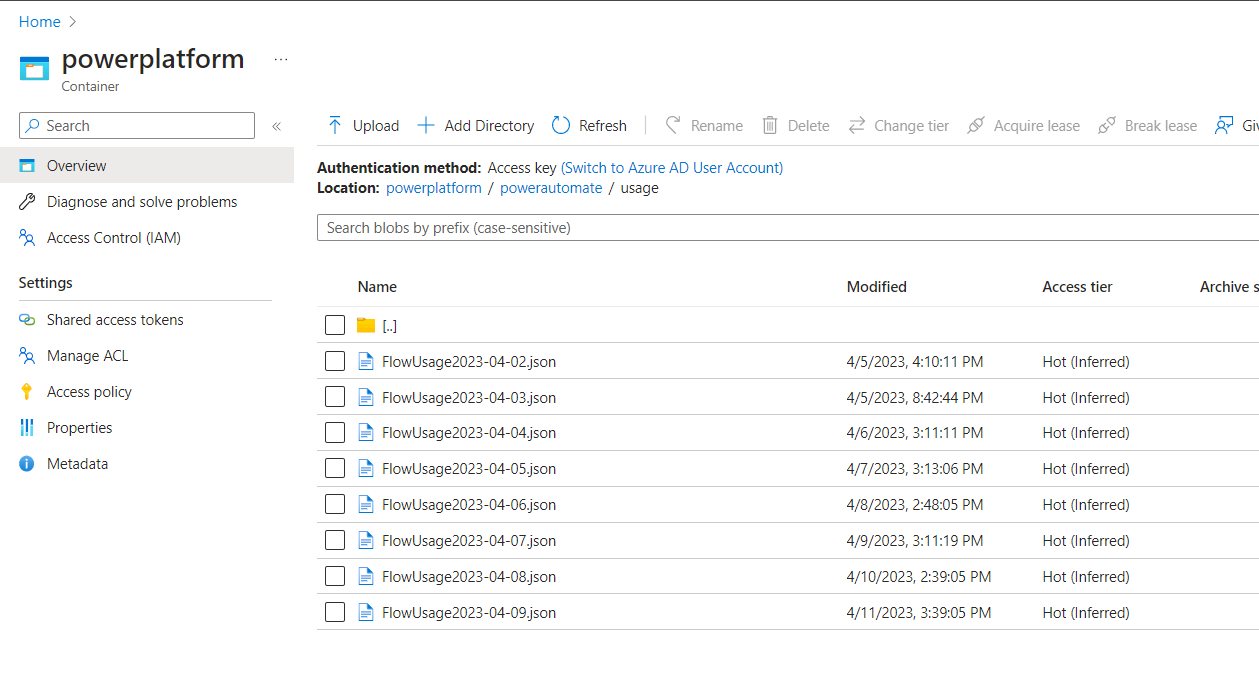

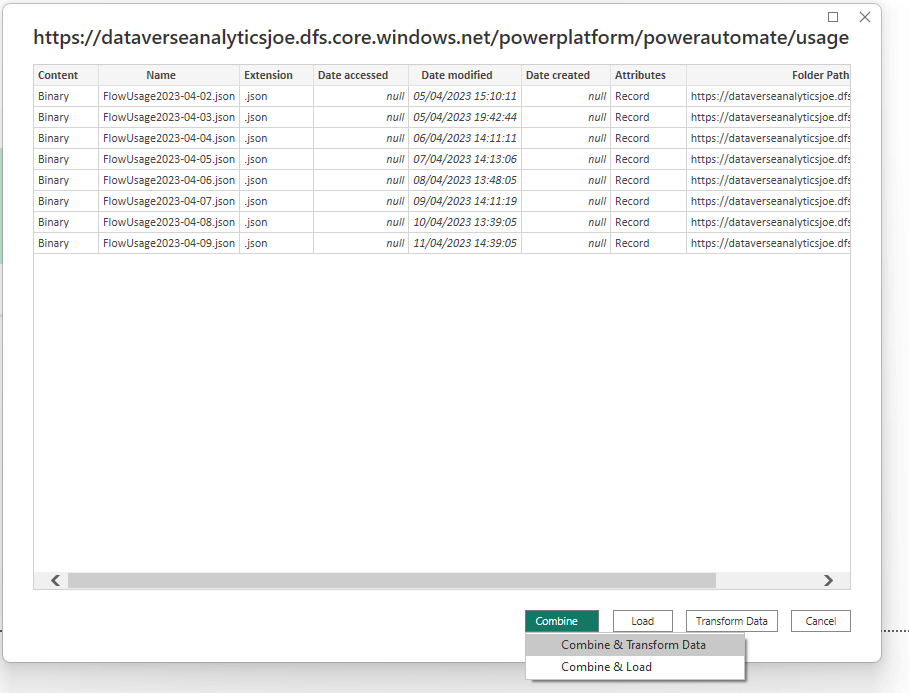

One folder that is likely to grab people’s attention is the “powerplatform/powerautomate/usage” folder. Within this folder, you will find one file per day, with details of all Power Automate runs executed during that day. A new file is created automatically every 24 hours. In my tenant, the most recent usage data was from two days ago. It remains unclear whether this will improve once Data Export is officially released.

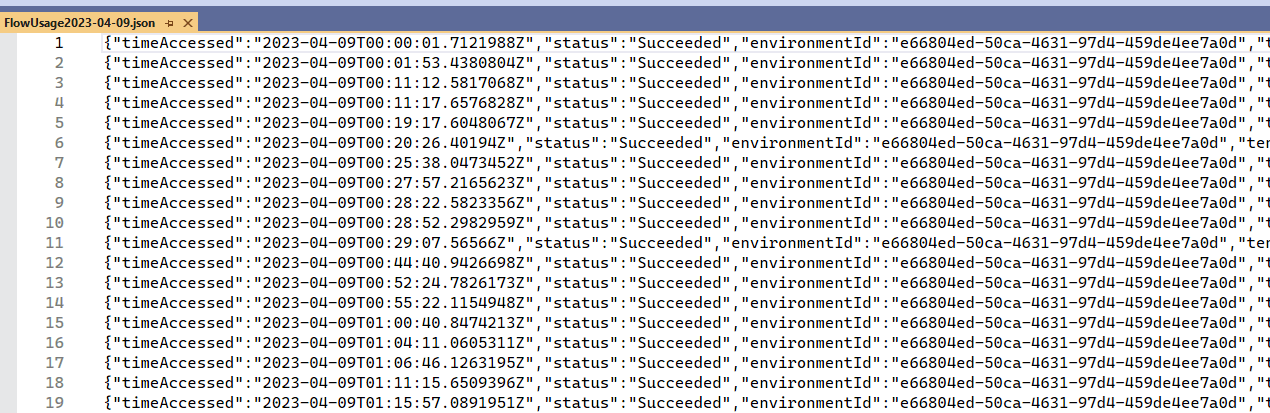

The Data Export service is similar to the Synapse Link for Dataverse service I have covered in previous posts. However, the analytics data is provided in JSON format rather than as raw data, as it is with Synapse Link. JSON is semi-structured data, which must be parsed before it can be used. Details on the schema can be found here. In the flow usage files, each row contains a JSON object with details of a single flow run.
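
To give a feel for what "one JSON object per row" means in practice, here is a minimal Python sketch that parses such rows into dictionaries. The sample rows are hypothetical and only use the resourceId and status fields; real usage files contain more fields, so check the schema before relying on any of them.

```python
import json
from typing import Iterable, Iterator


def parse_usage_lines(lines: Iterable[str]) -> Iterator[dict]:
    """Yield one dict per non-empty line, where each line holds one JSON object."""
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines between records
        yield json.loads(line)


# Hypothetical sample rows, not taken from a real export.
sample = [
    '{"resourceId": "flow-a", "status": "Succeeded"}',
    '',
    '{"resourceId": "flow-b", "status": "Failed"}',
]

runs = list(parse_usage_lines(sample))
print(len(runs))
```

With a downloaded usage file, you could pass an open file handle instead of the sample list, since iterating over a text file yields its lines.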

How to query your Power Platform Analytics from Power BI

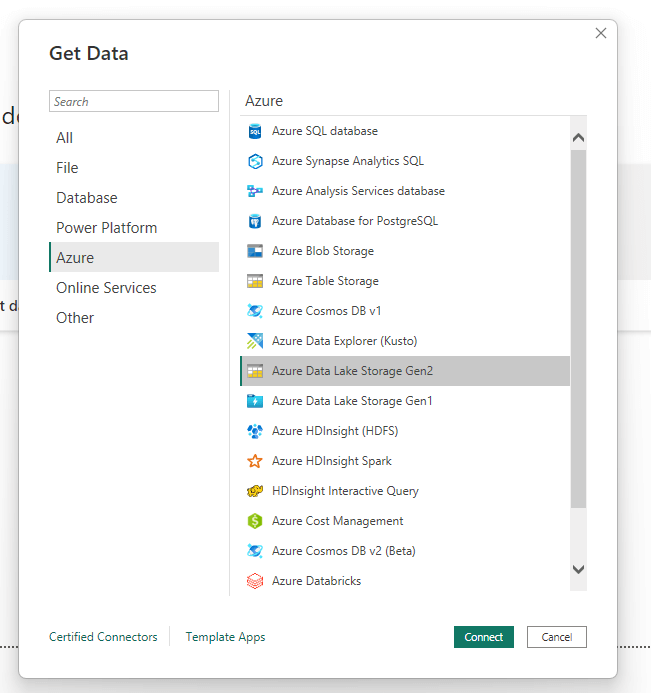

The data in your Data Lake can be consumed in multiple ways, including with Power BI. If you want to query your data from Power BI, select Azure Data Lake Storage Gen2 as your source.

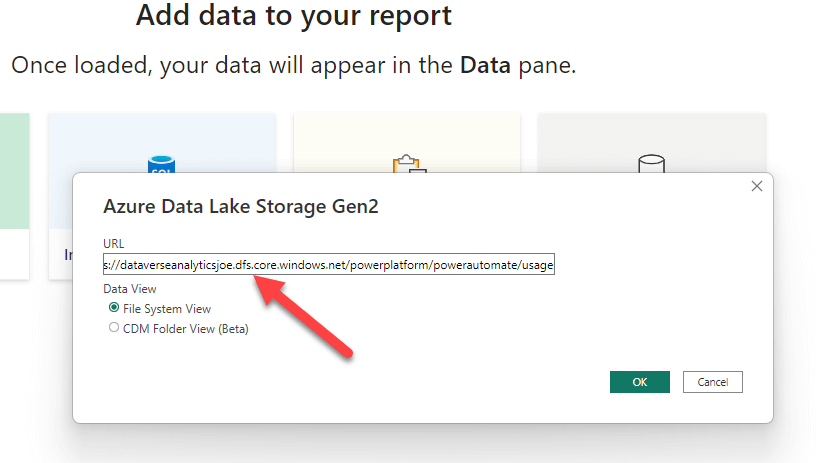

Enter the URL of the folder where the data resides. If you copied the URL from the storage account properties, make sure it contains dfs rather than blob; otherwise, you will get an error.
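
If you script your setup, the blob-to-dfs swap is easy to automate. The sketch below is one way to do it in Python, assuming the standard public-cloud endpoint suffixes (blob.core.windows.net and dfs.core.windows.net); the account name mystorage is made up for illustration.

```python
from urllib.parse import urlsplit, urlunsplit


def to_dfs_url(url: str) -> str:
    """Return the URL with the Blob endpoint host replaced by the DFS endpoint host."""
    parts = urlsplit(url)
    # Only the host changes; the container and folder path stay the same.
    host = parts.netloc.replace(".blob.core.windows.net", ".dfs.core.windows.net")
    return urlunsplit((parts.scheme, host, parts.path, parts.query, parts.fragment))


# "mystorage" is a hypothetical storage account name.
blob_url = "https://mystorage.blob.core.windows.net/powerplatform/powerautomate/usage"
print(to_dfs_url(blob_url))
```

A URL that already points at the dfs endpoint passes through unchanged.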

Once you have authenticated your connection, select the Combine & Transform Data option.

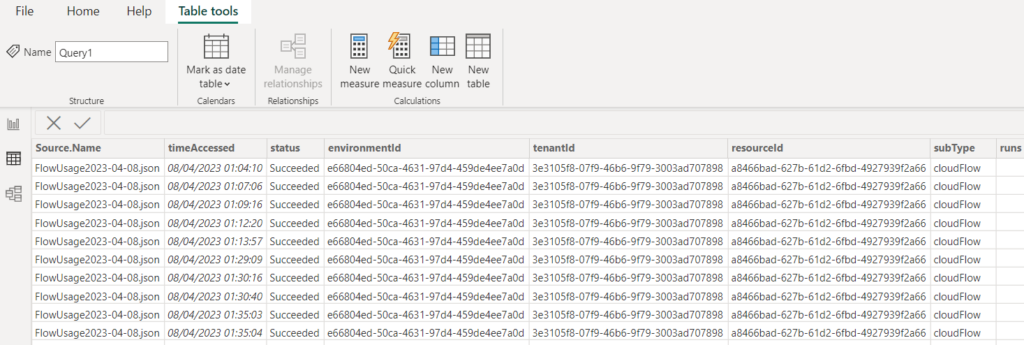

Once transformed, you have access to your Power Automate flow usage data in Power BI. The columns are hopefully intuitive: resourceId holds the workflow GUID, and status indicates whether the flow run succeeded or failed.
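
The same kind of summary you would build in Power BI can be sketched outside it too. The Python snippet below counts runs per flow and status, assuming each run has already been parsed into a dictionary with resourceId and status keys. The status values "Succeeded" and "Failed" and the flow identifiers are assumptions for illustration; check your own data for the actual values.

```python
from collections import Counter, defaultdict


def summarize_runs(runs):
    """Count runs per flow (resourceId), broken down by status."""
    summary = defaultdict(Counter)
    for run in runs:
        summary[run["resourceId"]][run["status"]] += 1
    # Convert to plain dicts so the result is easy to print or serialize.
    return {flow: dict(counts) for flow, counts in summary.items()}


# Hypothetical parsed runs; real rows carry GUIDs and more fields.
example_runs = [
    {"resourceId": "flow-a", "status": "Succeeded"},
    {"resourceId": "flow-a", "status": "Failed"},
    {"resourceId": "flow-a", "status": "Succeeded"},
    {"resourceId": "flow-b", "status": "Succeeded"},
]

for flow, counts in summarize_runs(example_runs).items():
    print(flow, counts)
```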

Summary

While still in preview, the ability to automatically export your Power Platform analytics to a Data Lake looks interesting. Once the data is in your Data Lake, you can use a range of tools to analyze and surface it. The possibilities are endless: you could query this data directly from the Data Lake or use Azure Data Factory to push it into a database or Dataverse.

You could also consider using the Power Platform Data Export option rather than the Microsoft Power Platform Center of Excellence for monitoring your Power Platform inventory and usage.